Prerequisite Topics

This page assumes you have prior knowledge of the following topics. Please read them before proceeding.

This example goes over a very basic dialogue setup. For longer conversations, you will likely want to create a conversation manager system. In this scenario, we will set up a quest NPC and two possible playable characters, and show how using Dialogue Voices and Dialogue Waves enables you to adjust the quest giver's tone depending on who they are speaking to.

| Quest NPC | Adam | Zoe |
|---|---|---|

Also, here's a snippet of our example design document:

- Quest NPC is a female soldier who wants to hire someone from Adam's team as backup on a supply escort mission.

- Adam is a mercenary who has a stealth-first, attacking only when necessary policy.

- Zoe is an ex-soldier who joined Adam's team after the last major Galactic Skirmish.

1 - Create Dialogue Voices

In this example, we are using characters from the Mixamo Animation Pack, available for free on the Unreal Engine Marketplace. In the Mixamo Animation Pack, all the characters inherit from a general character Blueprint. If you are working in your own project, or with a C++ setup, you will need to adjust the following steps, but the primary concepts still apply.

Each character has their own value for a Dialogue Voice variable. Even if you don't localize your audio content, having a distinct voice for each character means that you can associate a given voice actor's recordings with that voice, so Zoe will always sound like Zoe.

- In the MixamoAnimPack folder in the Content Browser, double-click MixamoCharacter_Master to open the Blueprint.

  ![MixamoMaster.png]()

- Add a new variable to the Blueprint.

  ![NewVariable.png]()

- Name the new variable DialogueVoice, then set its type to DialogueVoice Reference.

  ![DialogueVoiceRef.png]()

- Compile and save your Blueprint.

Now, we need to create the Dialogue Voice assets to use for each of our three characters.

- Go back to the Content Browser, and create a new Dialogue Voice asset.

  ![DialogueVoiceNew.png]()

- Name the new asset QuestNPC, then double-click to open it.

- Referring to our design document, we know that the quest NPC's voice is Feminine and Singular. Use the drop-down menus to set the Gender and Plurality accordingly.

  ![QuestNPC.png]()

- Repeat the process two more times to create a Dialogue Voice asset for Adam that is Masculine and Singular, and a Dialogue Voice asset for Zoe that is Feminine and Singular.

  ![AdamVoice.png]()

  ![ZoeVoice.png]()

- Save and close all your new Dialogue Voice assets.

Now that our Dialogue Voices have been created, we can associate them with our characters.

- Back in the Content Browser, in the Mixamo_SWAT folder, open the Mixamo_SWAT Blueprint.

  ![MixamoSWAT.png]()

- If your Blueprint is not a data-only Blueprint and the defaults are not already visible, click the Class Defaults button in the Toolbar to open the Blueprint's default properties.

  ![ClassDefaults.png]()

- Set Dialogue Voice in the Details panel to Quest NPC.

  ![DialogueVoiceSetSwat.png]()

- Repeat the process to set the Mixamo_Adam Blueprint's Dialogue Voice to Adam, and to set the Mixamo_Zoe Blueprint's Dialogue Voice to Zoe.

  ![AdamVoiceSet.png]()

  ![ZoeVoiceSet.png]()
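For readers who think in code, the relationship built in this step can be sketched in plain C++. This is only a conceptual model: `Gender`, `Plurality`, `DialogueVoice`, `Character`, and `describeVoice` are hypothetical stand-ins written for this illustration, not Unreal Engine types or functions. It shows a parent character type that carries a voice reference, with each child supplying its own value.

```cpp
#include <cassert>
#include <string>

// Plain C++ stand-ins for the assets in this step; these are not engine types.
enum class Gender { Neuter, Masculine, Feminine, Mixed };
enum class Plurality { Singular, Plural };

// Models a Dialogue Voice asset: a name plus its Gender and Plurality settings.
struct DialogueVoice {
    std::string name;
    Gender gender;
    Plurality plurality;
};

// Mirrors the shared parent Blueprint: every character carries a voice reference.
struct Character {
    std::string blueprintName;
    const DialogueVoice* dialogueVoice;  // set per child class, like a class default
};

// Returns a short label such as "Mixamo_Zoe -> Zoe", handy when debugging.
std::string describeVoice(const Character& character) {
    assert(character.dialogueVoice != nullptr && "assign a Dialogue Voice first");
    return character.blueprintName + " -> " + character.dialogueVoice->name;
}
```

With a `DialogueVoice{"QuestNPC", Gender::Feminine, Plurality::Singular}` assigned to a `Character{"Mixamo_SWAT", ...}`, `describeVoice` returns `"Mixamo_SWAT -> QuestNPC"`.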

To help identify your Dialogue Voice characters:

- Place your character in the world, then frame them in the viewport.

- Right-click the Dialogue Voice asset in the Content Browser.

- Select Asset Actions > Capture Thumbnail.

  ![ThumbnailCapture.png]()

2 - Build Dialogue Waves

For this example, we are just going to implement a greeting from Quest NPC to Adam and Zoe. For more information about complex dialogue, see the "Next Steps: Sound Cues and Dialogue" section below. Each line of dialogue needs a Dialogue Wave asset associated with it.

- Go back to the Content Browser, and create a new Dialogue Wave asset.

  ![DialogueWaveNew.png]()

- Name the new asset QuestGreeting, then double-click to open it.

- For the Spoken Text, enter "Hey! Could you come over here? I need your help with something important." Although the same text would be spoken when the NPC is talking to Adam and Zoe, the game design document gives us some hints that the way the text is spoken might be different for each listener. That means we should set up two different Dialogue Contexts.

- One context is already created by default. Fill in the Speaker entry with Quest NPC.

  ![Context1Speaker.png]()

- Click the plus sign icon to add a listener.

  ![Context1ListenerAdd.png]()

- Set the Directed At entry to Adam.

  ![Context1Listener.png]()

- Click Add Dialogue Context to add a new Dialogue Context.

  ![AddDialogueContext.png]()

- Set this context's Speaker to Quest NPC, and Directed At to Zoe.

  ![ZoeContext.png]()

We could also make some notes about how the voice actor should sound friendlier toward Zoe, as they have a shared military background, and be more abrupt with Adam, whom she doesn't trust because of his mercenary past. These would go in the Voice Actor Direction field. Finally, after the voice actor recordings come back, we would import those as Sound Waves and set them in the Sound Wave field for each context. In this example, we are not going to create Sound Waves, but you could use Sound Waves from the Starter Content to test.
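As a rough mental model, a Dialogue Wave can be pictured as one line of text plus a list of per-context entries, each pairing a speaker and listeners with its own recording and direction notes. The plain C++ sketch below is illustrative only: `ContextMapping`, `DialogueWave`, `findContext`, and `makeQuestGreeting` are hypothetical names, and the exact-match lookup is an assumption of this sketch rather than a description of how the engine resolves contexts.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Plain C++ stand-ins; these are not engine types.
struct DialogueVoice { std::string name; };

// One Dialogue Context entry: who speaks, to whom, and which recording to use.
struct ContextMapping {
    const DialogueVoice* speaker;
    std::vector<const DialogueVoice*> directedAt;
    std::string soundWave;            // empty until a recording is imported
    std::string voiceActorDirection;  // notes for the voice actor
};

struct DialogueWave {
    std::string spokenText;
    std::vector<ContextMapping> contexts;

    // Assumption: a context matches only if speaker and listeners match exactly.
    const ContextMapping* findContext(
        const DialogueVoice* speaker,
        const std::vector<const DialogueVoice*>& targets) const {
        assert(speaker != nullptr);
        for (const ContextMapping& mapping : contexts) {
            if (mapping.speaker == speaker && mapping.directedAt == targets) {
                return &mapping;
            }
        }
        return nullptr;  // no context was authored for this pairing
    }
};

// Builds the QuestGreeting wave from this step with its two contexts.
DialogueWave makeQuestGreeting(const DialogueVoice& npc,
                               const DialogueVoice& adam,
                               const DialogueVoice& zoe) {
    DialogueWave wave;
    wave.spokenText =
        "Hey! Could you come over here? I need your help with something important.";
    wave.contexts.push_back({&npc, {&adam}, "", "Abrupt, wary of his mercenary past"});
    wave.contexts.push_back({&npc, {&zoe}, "", "Friendlier, shared military background"});
    return wave;
}
```

Looking up the pairing of the quest NPC's voice with Zoe's voice returns the friendlier entry, while a pairing nobody authored (for example, Adam speaking to the NPC) returns `nullptr`.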

The Dialogue Wave is also where you can set a Subtitle Override . This is useful for effort sounds, as well as some other cases, such as characters speaking in a foreign language not known to the player.

3 - Set the Context

Now that we have our Dialogue Voices and Dialogue Wave set up, we can give our quest NPC some logic to create the right context for her greeting. Again, a Dialogue Context involves at least two Dialogue Voices: a Speaker, and at least one Dialogue Voice that the dialogue is Directed At.

- Add a Box component to the Mixamo_SWAT Blueprint.

  ![AddBoxComponent.png]()

- In the Viewport, adjust the Box component so it is around the height of the character, and extends some distance in front of her. Make sure it doesn't overlap her, or set her Capsule component to not generate overlap events, or she will trigger the conversation logic herself.

  ![TriggerVolume.png]()

- Add a new Dialogue Wave variable named Greeting.

- Compile your Blueprint and set the default value to the QuestGreeting Dialogue Wave you made previously.

  ![QuestGreetingDetails.png]()

-

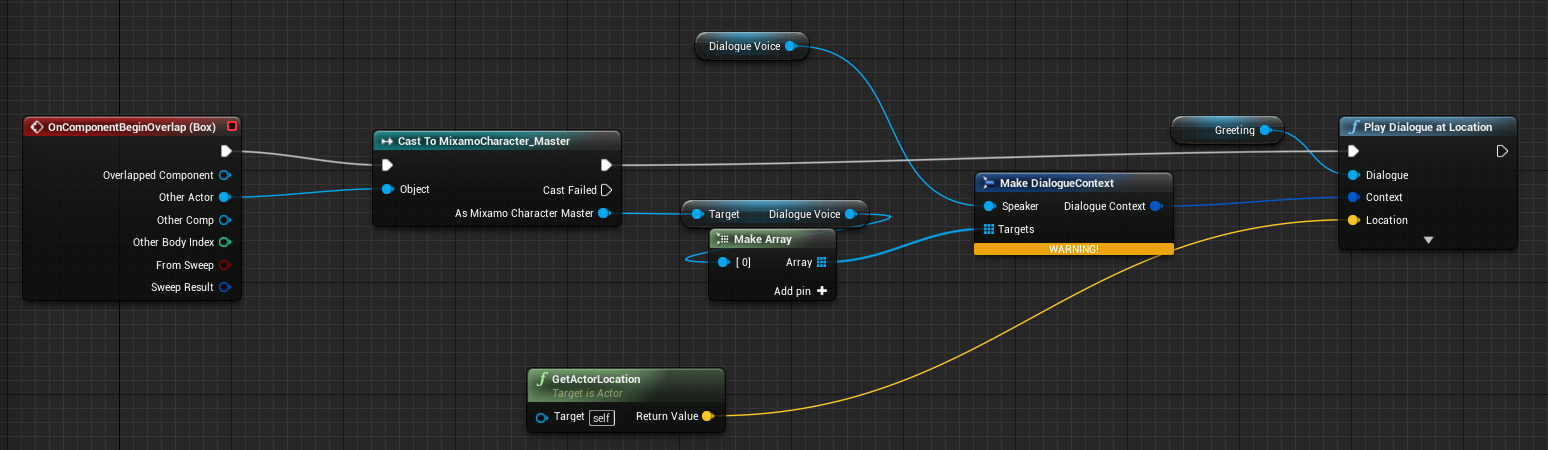

Set up the following Blueprint graph.

This graph:

-

Fires after something overlaps the Box component

-

Casts the Overlapping Actor to our MixamoCharacter_Master , where we added the Dialogue Voice variable

-

Gets the voice from the Overlapping Actor and uses it as the Target for our Dialogue Context .

-

Uses the Quest NPC's voice for the Speaker for our Dialogue Context .

-

Plays the Dialogue Wave named Greeting at our Quest NPC's location, using the correct **Dialogue Context**The Mixamo Animation pack comes with a Game Mode we can use to test our dialogue setup.

- Open World Settings.

- Set the GameMode Override to MixamoGame.

- Set the Default Pawn Class to Mixamo_Adam or Mixamo_Zoe.

  ![SetDefaultPawn.png]()

Now, if the QuestNPC is placed in the level, you can run up to them with either Adam or Zoe and trigger the dialogue.
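The overlap logic described in this step can also be read as ordinary code. The following is a minimal, engine-independent C++ sketch of the same flow: cast the overlapping actor, take its voice as the target, use the NPC's own voice as the speaker, and play. All names here (`Actor`, `MixamoCharacter`, `QuestNpc`, `onBoxOverlap`, `played`) are hypothetical stand-ins, and the "play" step only records the context instead of producing audio. The self-overlap check is an extra safeguard added for this sketch.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Plain C++ stand-ins; these are not engine types.
struct DialogueVoice { std::string name; };

struct Actor { virtual ~Actor() = default; };

// Models the shared parent Blueprint that owns the Dialogue Voice variable.
struct MixamoCharacter : Actor {
    const DialogueVoice* dialogueVoice = nullptr;
};

struct DialogueContext {
    const DialogueVoice* speaker;
    std::vector<const DialogueVoice*> targets;
};

struct QuestNpc : MixamoCharacter {
    std::vector<DialogueContext> played;  // records each greeting that would play

    // Called when something overlaps the trigger box in front of the NPC.
    // Returns true if a greeting was played.
    bool onBoxOverlap(Actor& other) {
        assert(dialogueVoice != nullptr && "the NPC needs her own voice set");
        if (&other == this) return false;  // ignore the NPC overlapping herself
        // The cast fails for anything that is not one of our characters.
        auto* character = dynamic_cast<MixamoCharacter*>(&other);
        if (character == nullptr || character->dialogueVoice == nullptr) return false;
        // Speaker is the NPC; the target is whoever walked into the box.
        DialogueContext context{dialogueVoice, {character->dialogueVoice}};
        played.push_back(context);  // stand-in for playing the Greeting wave
        return true;
    }
};
```

When Zoe's character overlaps the box, the recorded context has the NPC's voice as speaker and Zoe's voice as its single target. A plain `Actor`, such as a prop, fails the cast and plays nothing.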

4 - Displaying Subtitles

Subtitles should be enabled by default, but if you need to check that they are on, there is a checkbox in Project Settings.

- Open Project Settings.

- Under General Settings, locate the Subtitles section, then make sure the Subtitles Enabled box is checked.

  ![SubtitlesOn.png]()

Now, when you trigger the Quest NPC's dialogue, the accompanying subtitle text shows at the bottom of the screen.

Subtitles will not display unless there is a Sound Wave set in your Dialogue Wave for the context that is currently happening.
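The rule above can be summarized as a small decision function. This is a plain C++ sketch, not engine code: `ActiveContext`, `subtitleToDisplay`, and `subtitleRuleExamples` are hypothetical names. It assumes that a non-empty Subtitle Override replaces the Spoken Text, which is an assumption of this sketch.

```cpp
#include <cassert>
#include <string>

// Plain C++ stand-in for the context that is currently playing.
struct ActiveContext {
    std::string spokenText;
    std::string subtitleOverride;  // empty means "use spokenText"
    std::string soundWave;         // empty means no recording is assigned
};

// Returns the subtitle to show, or an empty string when nothing should appear.
std::string subtitleToDisplay(const ActiveContext& context, bool subtitlesEnabled) {
    if (!subtitlesEnabled || context.soundWave.empty()) {
        return std::string();  // no Sound Wave set, so no subtitle
    }
    return context.subtitleOverride.empty() ? context.spokenText
                                            : context.subtitleOverride;
}

// A few worked cases for the rule.
void subtitleRuleExamples() {
    ActiveContext greeting{"Hey! Could you come over here?", "", ""};
    assert(subtitleToDisplay(greeting, true).empty());  // missing Sound Wave
    greeting.soundWave = "PlaceholderWave";             // hypothetical asset name
    assert(subtitleToDisplay(greeting, true) == "Hey! Could you come over here?");
    assert(subtitleToDisplay(greeting, false).empty()); // subtitles switched off
}
```

If subtitles do not appear in your test, the first thing to check is therefore whether the context that actually played has a Sound Wave assigned.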

5 - Next Steps: Sound Cues and Dialogue

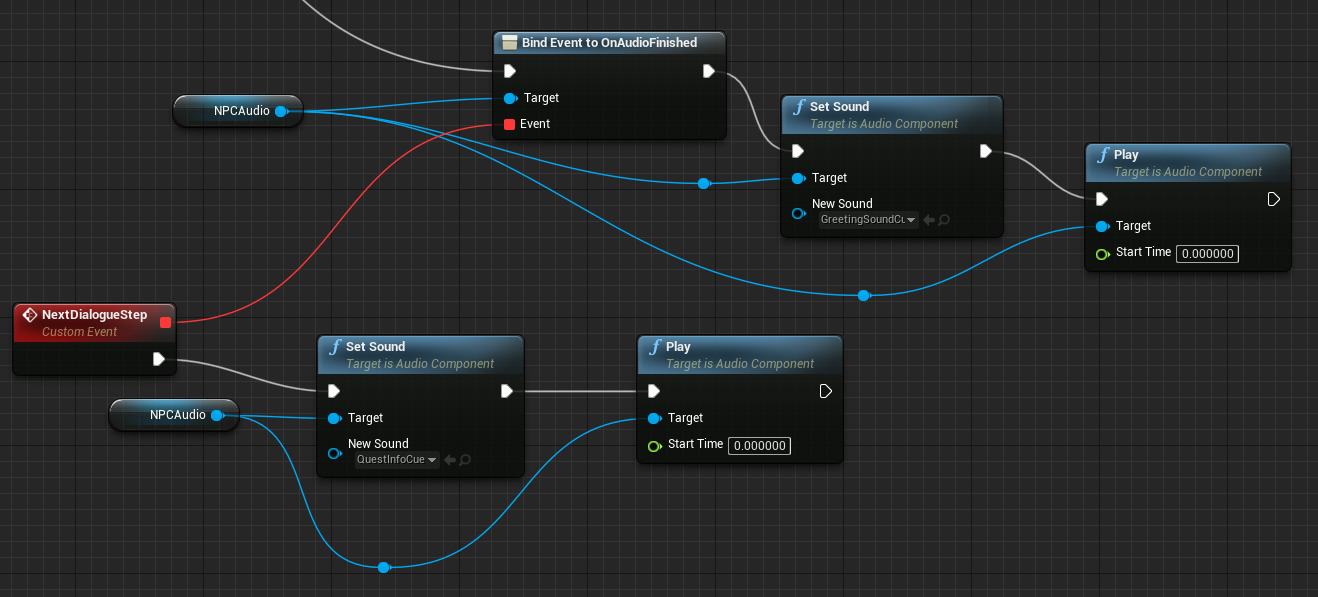

For a more complex conversation, you will likely want to use Sound Cues and Audio Components. Audio Components enable you to bind some functionality to when audio is finished playing, using the On Audio Finished delegate.

One thing to consider, however, is that you cannot dynamically change the Dialogue Context when using Sound Cues, so your Blueprints or C++ logic will look different than the above example.

To play Dialogue Waves using Sound Cues, there is a Dialogue Player node in the Sound Cue Editor.

Select the Dialogue Player node and look at the Details panel. Here is where you can set the Dialogue Wave to use, as well as the Speaker and Directed At entries for the Context .

Here is some example Blueprint logic that shows how you could bind events to play the next piece of dialogue after the previous audio finishes playing.
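In text form, the chaining idea might look like the following plain C++ sketch. It is a conceptual model only: `AudioComponent`, `Conversation`, `play`, and `finish` are hypothetical stand-ins, with `onAudioFinished` playing the role of the On Audio Finished delegate and `finish()` simulating the moment playback ends. Cue names are ordinary strings chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Stand-in for an Audio Component; it logs cues instead of producing sound.
class AudioComponent {
public:
    std::function<void()> onAudioFinished;  // models the On Audio Finished delegate
    std::vector<std::string> log;           // every cue that started playing

    void play(const std::string& cue) { log.push_back(cue); }

    // Simulates the moment the current cue finishes playing.
    void finish() {
        if (onAudioFinished) onAudioFinished();
    }
};

// Plays a list of cues one after another, advancing on each "finished" event.
// Note: the bound callback captures `this`, so keep the object in place.
class Conversation {
public:
    Conversation(AudioComponent& audio, std::vector<std::string> cues)
        : audio_(audio), cues_(std::move(cues)) {
        assert(!cues_.empty() && "a conversation needs at least one cue");
        audio_.onAudioFinished = [this] { playNext(); };
    }

    void start() {
        next_ = 0;
        playNext();
    }

private:
    void playNext() {
        if (next_ < cues_.size()) {
            audio_.play(cues_[next_++]);
        }
        // When no cues remain, the event is simply ignored.
    }

    AudioComponent& audio_;
    std::vector<std::string> cues_;
    std::size_t next_ = 0;
};
```

Because each cue fixes its own speaker and listener, a conversation built this way typically needs one cue per context, and the logic that picks which cue list to play would choose between them up front.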