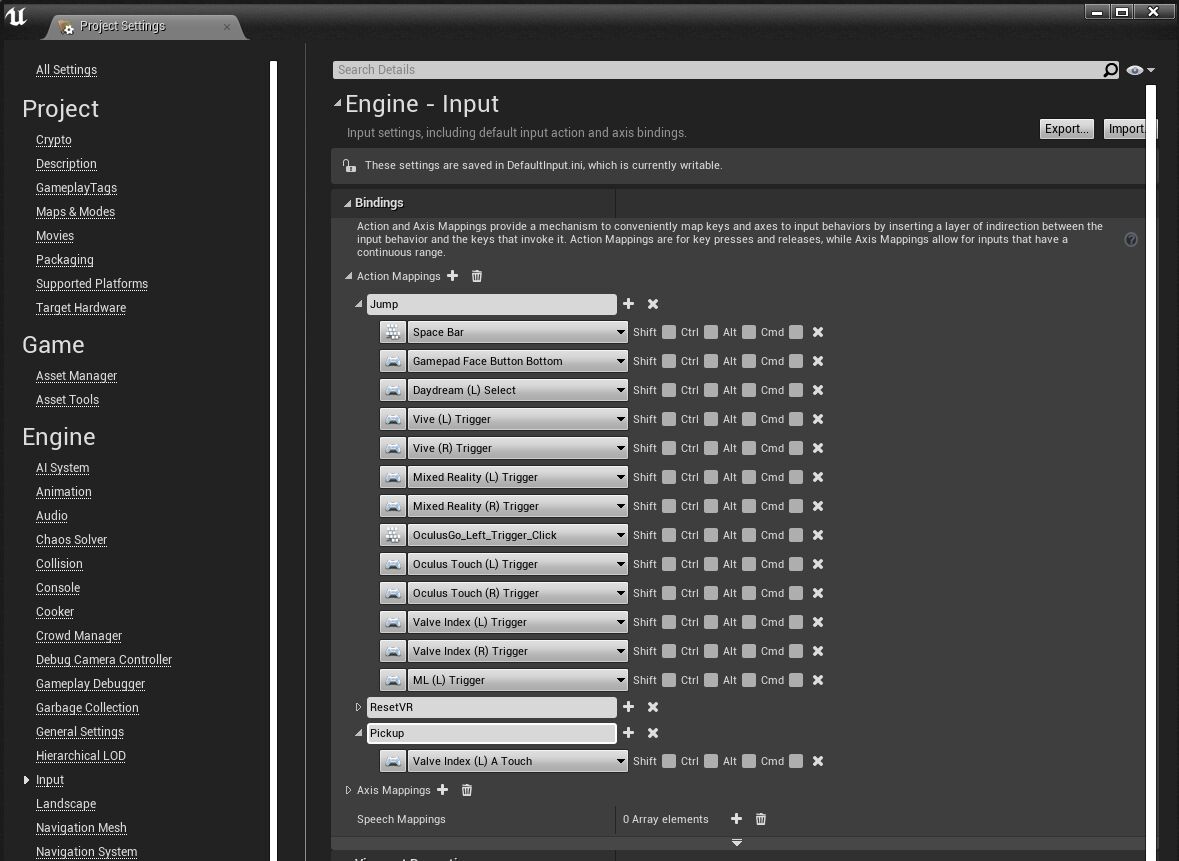

The OpenXR runtime uses interaction profiles to support various hardware controllers and provide action bindings for whichever controller is connected. OpenXR input mapping in Unreal Engine relies on the Action Mappings Input System to connect actions to the OpenXR interaction profiles. See Creating New Inputs for a guide on how to use the Action Mappings Input System.

The OpenXR input system is designed to provide cross-device compatibility by emulating any controller mapping that isn't explicitly specified with Action Mappings in the Unreal project. When emulating controller mappings, the OpenXR runtime chooses controller bindings that closely match the user's controller. Because OpenXR provides this cross-compatibility for you, you should only add bindings for controllers you support and can test with. Any bindings you specify for a controller define which actions are connected to that controller. If you bind only some of your actions to a controller, that controller won't support the actions you left unbound.

In the example below, the project has two actions: Jump and Pickup.

- Jump is mapped to keys on multiple controllers, such as Valve Index (L) Trigger and Oculus Touch (L) Trigger.

- Pickup is only mapped to Valve Index (L) A Touch.

In this case, the OpenXR runtime will not emulate any of the other controllers for the Pickup action, because those controllers have bindings for Jump but not for Pickup. If the keys for the other controllers were removed from Jump, then the OpenXR runtime would be able to emulate the controllers for both Jump and Pickup.

Some runtimes support only a single interaction profile and cannot emulate any other profile. For that reason, add bindings for every device you have access to and plan to support.
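The binding rule described above can be sketched as a small standalone model. This is not engine or OpenXR runtime code: `BindingTable`, `Support`, `ResolveSupport`, and `MakeExampleTable` are hypothetical names introduced only for illustration, and the controller names are plain strings rather than real key identifiers. The sketch assumes the simplified rule from the text: a controller with at least one explicit binding supports only the actions bound to it, while a controller with no bindings at all is left for the runtime to emulate, if the runtime is able to.

```cpp
#include <map>
#include <set>
#include <string>

// Hypothetical model: action name -> controllers that have an explicit binding.
using BindingTable = std::map<std::string, std::set<std::string>>;

enum class Support
{
    Bound,    // the action is explicitly bound on this controller
    Missing,  // the controller has other bindings, so this action is unsupported
    Emulated  // the controller has no bindings; the runtime may emulate it
};

// Applies the simplified rule from the text to one controller/action pair.
Support ResolveSupport(const BindingTable& Table,
                       const std::string& Controller,
                       const std::string& Action)
{
    bool bControllerHasAnyBinding = false;
    for (const auto& Entry : Table)
    {
        if (Entry.second.count(Controller) > 0)
        {
            if (Entry.first == Action)
            {
                return Support::Bound;
            }
            bControllerHasAnyBinding = true;
        }
    }
    // Emulation is only possible if the runtime supports it (an assumption here).
    return bControllerHasAnyBinding ? Support::Missing : Support::Emulated;
}

// The Jump/Pickup example from the text, using illustrative controller names.
BindingTable MakeExampleTable()
{
    return BindingTable{
        {"Jump", {"ValveIndex", "OculusTouch"}},
        {"Pickup", {"ValveIndex"}},
    };
}
```

With the example table, `ResolveSupport` reports Pickup as `Missing` on the Oculus Touch controller because that controller is bound to Jump only, while a controller that appears nowhere in the table resolves to `Emulated` for both actions.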

Poses

OpenXR provides two poses to represent how a user would hold their hand when performing the actions:

- Grip: Represents the position and orientation of the user's closed hand in order to hold a virtual object.

- Aim: Represents a ray from the user's hand or controller used to point at a target.

See the OpenXR specification for more details on the two poses. In Unreal Engine, these two poses are represented as motion sources and are returned in the results when you call Enumerate Motion Sources, if they're available for your device.

Unreal Engine uses a different coordinate system than the one described in the OpenXR specification. Unreal uses a left-handed coordinate system: +X forward, +Z up, and +Y right. OpenXR specifies a right-handed coordinate system: +X right, +Y up, and -Z forward.

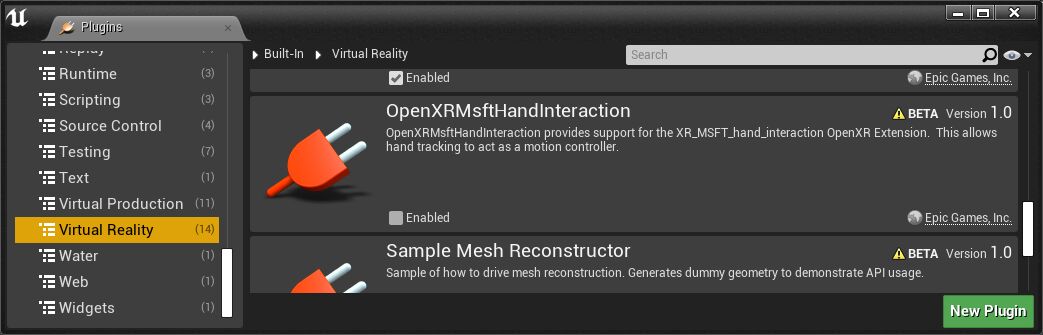
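To make the coordinate-system difference concrete, the following minimal sketch converts a position from OpenXR axes (right-handed, +X right, +Y up, -Z forward, in meters) to Unreal axes (left-handed, +X forward, +Y right, +Z up). `XrVec3`, `UeVec3`, `ToUnreal`, and `kWorldToMeters` are hypothetical stand-ins, not engine or OpenXR types, and the scale of 100 units per meter is an assumption based on Unreal's default of one unit per centimeter.

```cpp
// Hypothetical stand-in for an OpenXR position, in meters.
struct XrVec3
{
    float x;
    float y;
    float z;
};

// Hypothetical stand-in for an Unreal position, in Unreal units.
struct UeVec3
{
    float X;
    float Y;
    float Z;
};

// Assumed scale: Unreal's default of 100 units (centimeters) per meter.
constexpr float kWorldToMeters = 100.0f;

// Remaps the axes: OpenXR -Z (forward) becomes Unreal +X, OpenXR +X (right)
// becomes Unreal +Y, and OpenXR +Y (up) becomes Unreal +Z.
UeVec3 ToUnreal(const XrVec3& V, float Scale = kWorldToMeters)
{
    return UeVec3{-V.z * Scale, V.x * Scale, V.y * Scale};
}
```

For example, a point one meter in front of the OpenXR origin, `{0, 0, -1}`, maps to 100 units along Unreal's +X axis. Swapping two axes and negating one is what changes the handedness; orientations need a corresponding remapping that is not shown here.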

Enable the OpenXRMsftHandInteraction plugin to replicate the OpenXR grip and aim poses of tracked hands on runtimes that support this extension plugin, such as the HoloLens.

Motion Controllers

Motion Controllers can be accessed through a Motion Controller Component on your project's Pawn. The Motion Controller Component inherits from Scene Component, which supports location-based behavior, and moves based on the tracking data from the hardware. This component provides the functionality to render the Motion Controller and to expose the controller for user interactions defined by the Pawn. The following sections explain how to set up both of these functions.

Visualizing

In the Motion Controller Component's Details panel under the Visualization section, you can specify whether to display a model for the controller and which Static Mesh to use. There are also Material Overrides you can apply to the mesh.

Under the Motion Controller section of the Details panel, you can set the Motion Source for the Motion Controller. Motion Source is an FName that identifies which input source is expected in the XR experience to fetch its tracked position and rotation. The list of FNames for Motion Sources can be extended through additional plugins. To select the controller in one of the user's hands, choose the Left or Right Motion Source.

Accessing Details

Since the Motion Controller inherits from a Scene Component, it contains the transform of your device in the scene, which you can access through functions such as GetWorldLocation. You can also attach meshes and other Scene Components to the Motion Controller Component so virtual content appears to be attached to your hands.

To access device-specific information about the Motion Controllers, such as Grip Position or Hand Key Position, add Get Motion Controller Data and Break XRMotionControllerData nodes to your Blueprint. You need to specify which Hand to get the data from. To do this, call Get Motion Source on your Motion Controller variable to see whether it's set to Left or Right, and pass the result in as the Hand input on the Get Motion Controller Data function.

Orientation Between Plugins

OpenXR now defines a standard for motion controller behavior across platforms.

Since each SDK might have implemented its behavior differently before the OpenXR standard was created, there may be discrepancies in how the origin and orientation of the motion controller are represented between the plugins. The following images demonstrate how motion controllers can be oriented differently at runtime, using the Oculus VR plugin and the OpenXR plugin as examples.

The Motion Controller Component has a 3D Gizmo, and an Oculus Quest 2 controller model attached as a child Component.

- The image on the left shows the behavior at runtime using the Oculus VR plugin. The origin of the controller is located at the position of the joystick and the orientation of the controller is angled between the Z and X axes.

- The image on the right shows the behavior at runtime using the OpenXR plugin. The origin of the controller is located at the position of the grip button and the orientation of the controller is parallel to the X axis.

Hand Tracking

There are currently two platforms that support Hand Tracking with Unreal Engine: HoloLens 2 and Oculus Quest. The following sections explain how to get started with using hand tracking for user input on these platforms.

HoloLens 2

Hand Tracking on HoloLens 2 is available through the Microsoft OpenXR plugin. Hand Tracking functionality defined in the Microsoft OpenXR plugin is compatible with the OpenXR and XRMotionController functions. See Microsoft's Hand Tracking Documentation for more details on visualizing the user's hands and using them as input.

Oculus Quest

Hand Tracking on Oculus Quest is available through the Oculus VR plugin. To use Hand Tracking on Oculus Quest in an OpenXR app, both the OpenXR and Oculus VR plugins must be enabled. The APIs for Hand Tracking on Oculus Quest are provided through an Oculus-custom component. See Oculus's Hand Tracking Documentation for more details on visualizing the user's hands and using them as input.