Whisper-based Real-time Speech Recognition

Real-time speech-to-text transcription and alignment with multi-language support, based on OpenAI's Whisper model. No python or any separated servers needed.

- 支持的平台

- 支持的引擎版本4.27, 5.0 - 5.2

- 下载类型引擎插件此产品包含一款代码插件,含有预编译的二进制文件以及与虚幻引擎集成的所有源代码,能够安装到您选择的引擎版本中,并根据每个项目的需求启动。

描述

评价

提问

Demo video: Link

Documentation: Link

Free Demo project (exe): Link

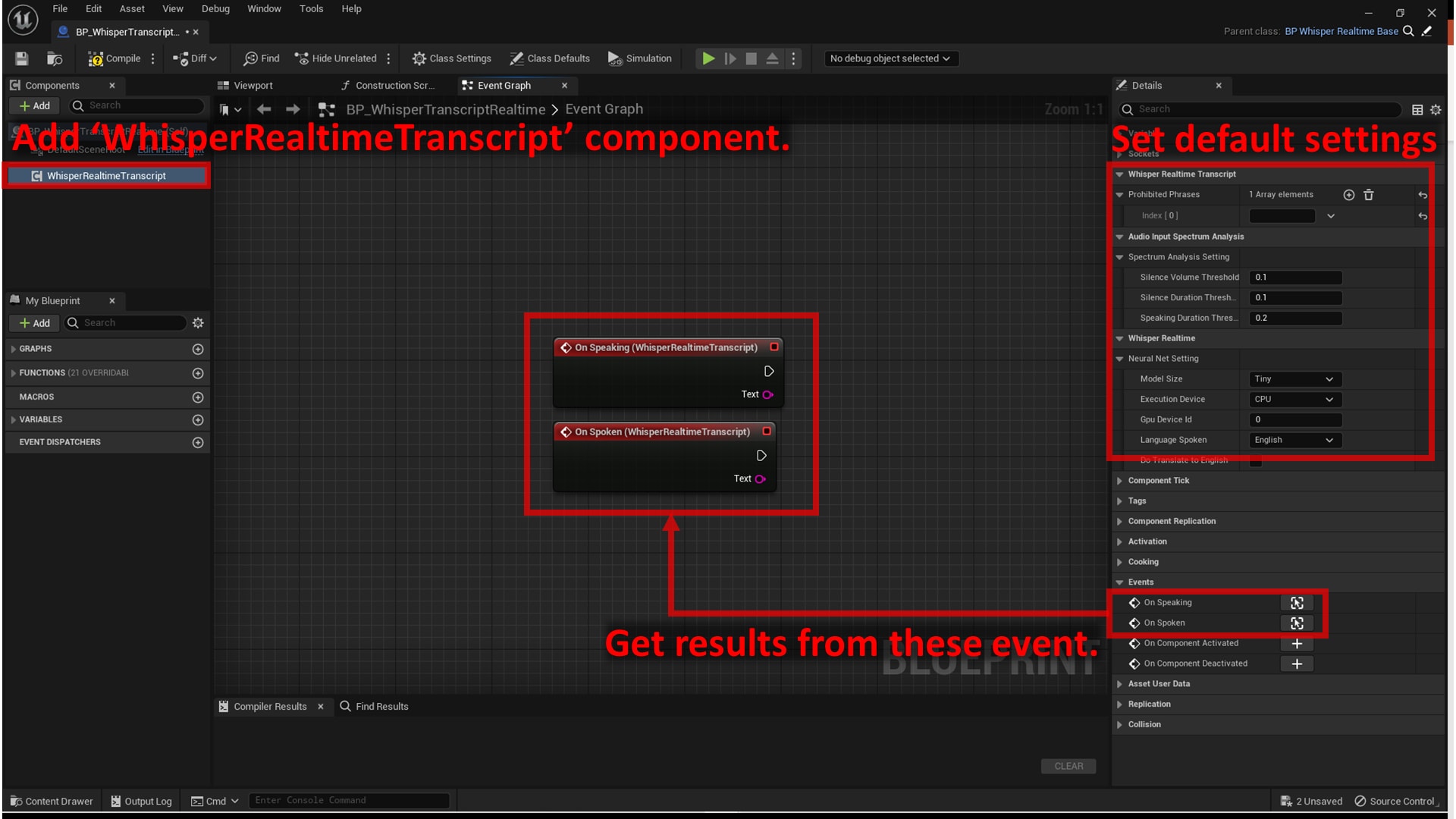

This plugin allows you to recognize speech in 99 languages, just by adding one component to your blueprint, without relying on any separate servers or subscriptions.

The machine learning model used in this plugin is based on OpenAI's Whisper, but has been optimized to run on the ONNX Runtime for best performance and to minimize dependencies.

Accuracy varies for each supported language. See the original paper for the accuracy of supported languages.

To use this with a GPU, you need a supported NVIDIA GPU and to install the following versions of CUDA and cuDNN.

- CUDA: 11.6

- cuDNN: 8.5.0.96

技术细节

Features:

- Real-time transcription from microphone input to text in 99 languages

- Real-time translation from microphone input to English text

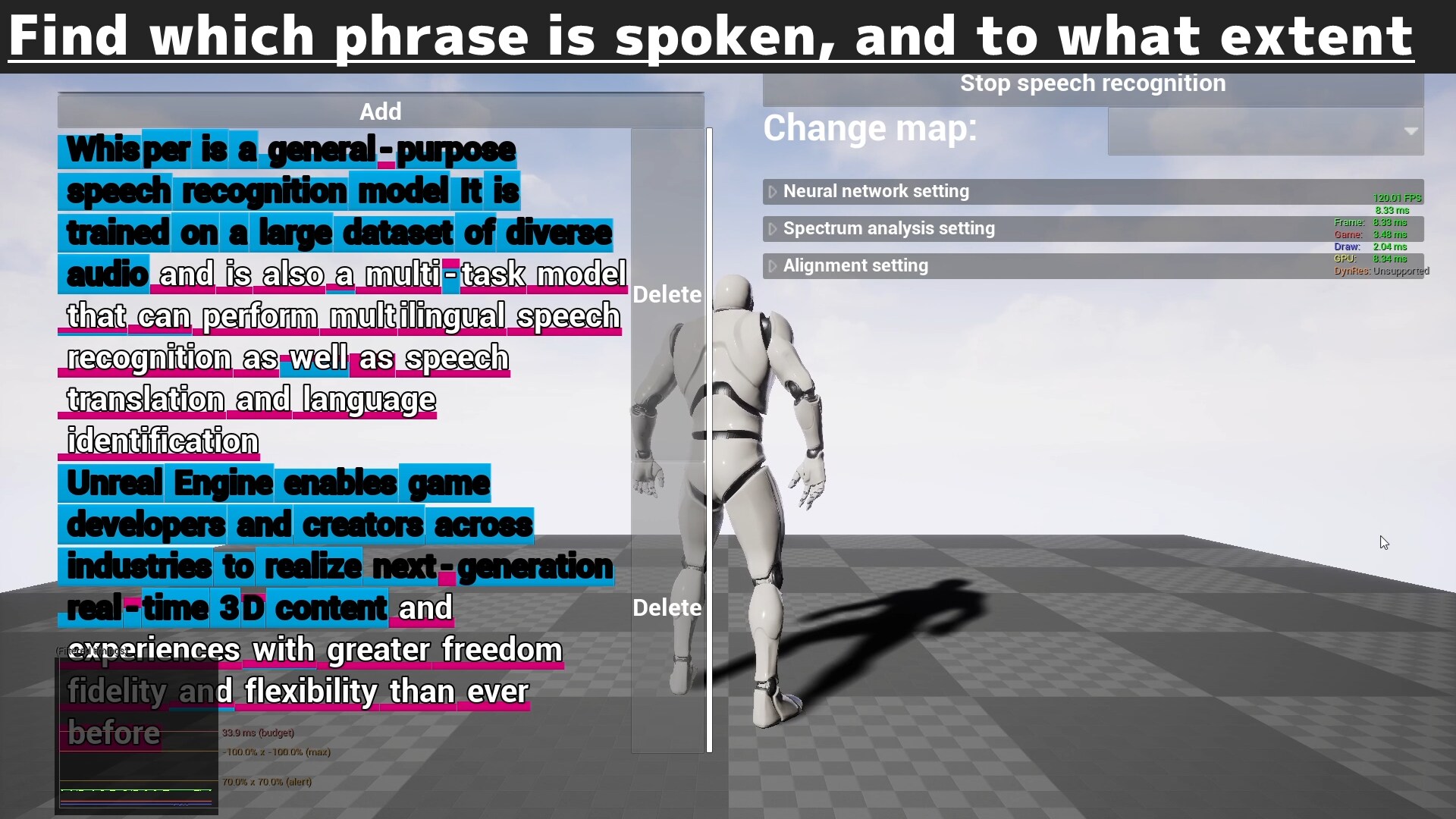

- Real-time alighment from microphone input to user-specified text

Code Modules:

- AudioInputSpectrumAnalysis (Runtime)

- ByteLevelBpeTokenizer (Runtime)

- CustomizedOnnxRuntime (Runtime)

- WhisperOnnxModel (Runtime)

Number of Blueprints: 2

Number of C++ Classes: 13+

Network Replicated: No

Supported Development Platforms: Windows 64-bit

Supported Target Build Platforms: Windows 64-bit

Documentation: Link

Important/Additional Notes:

- To use with GPU, you need to install CUDA 11.6 and cuDNN 8.5.0.96.