Whisper-based Real-time Speech Recognition

Real-time speech-to-text transcription and alignment with multi-language support, based on OpenAI's Whisper model. No Python or separate servers needed.

- Supported Platforms

- Supported Engine Versions: 4.27, 5.0 - 5.2

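- Download Type: Engine Plugin. This product contains a code plugin, complete with prebuilt binaries and all of its source code integrated with Unreal Engine. It can be installed to an engine version of your choice and then enabled on a per-project basis.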

Description

Demo video: Link

Documentation: Link

Free Demo project (exe): Link

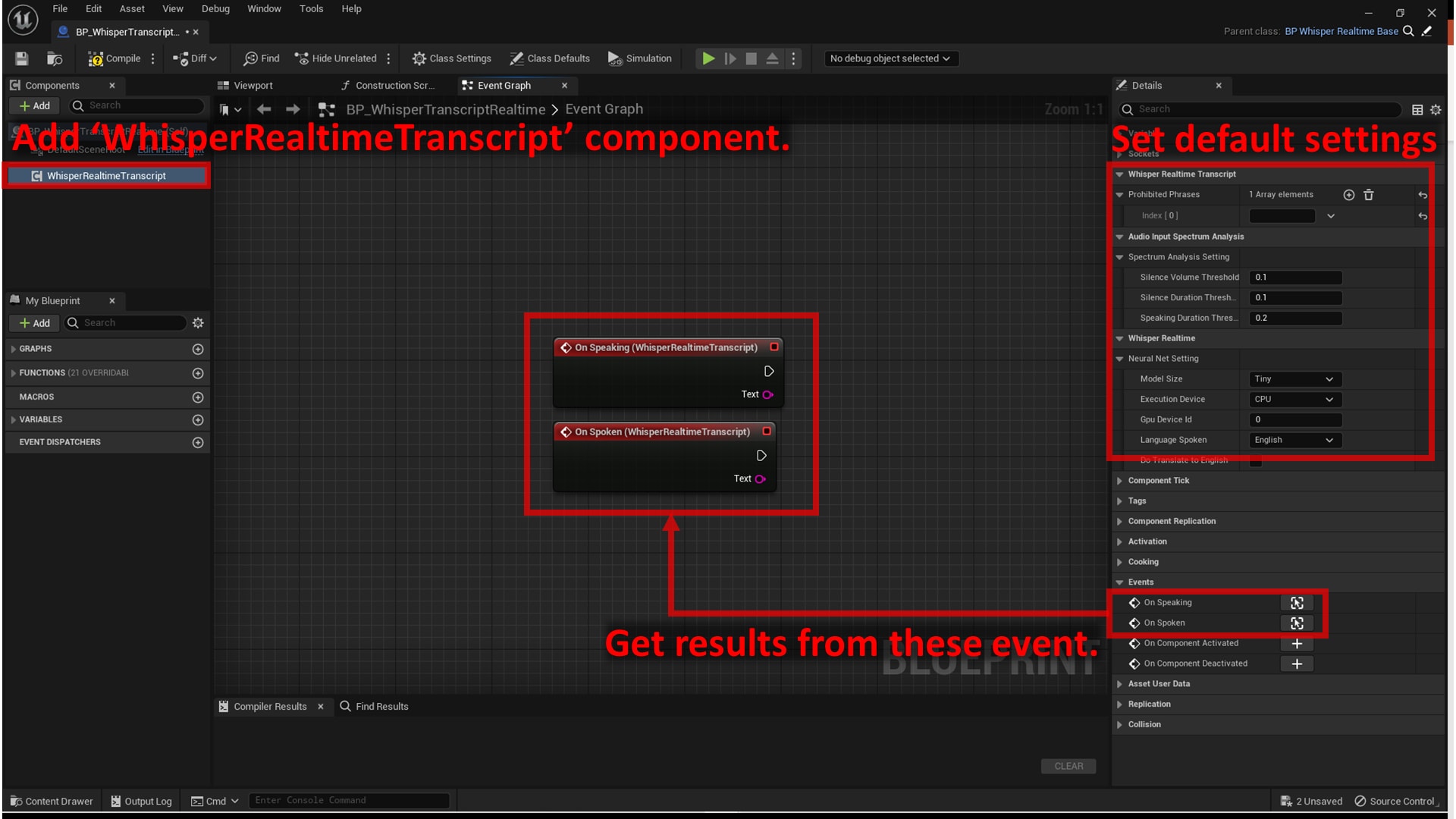

This plugin lets you recognize speech in 99 languages just by adding a single component to your Blueprint, without relying on any separate servers or subscriptions.

The machine learning model used in this plugin is based on OpenAI's Whisper, but has been optimized to run on ONNX Runtime for the best performance and minimal dependencies.

Accuracy varies by language. See the original Whisper paper for per-language accuracy figures.

To run the plugin on a GPU, you need a supported NVIDIA GPU with the following versions of CUDA and cuDNN installed:

- CUDA: 11.6

- cuDNN: 8.5.0.96
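If you want your project to warn users when the installed GPU stack does not match these versions, the integers reported by NVIDIA's libraries have to be decoded first. The sketch below assumes the documented encodings: `cudaRuntimeGetVersion()` reports `1000 * major + 10 * minor`, and `cudnnGetVersion()` for cuDNN 8.x reports `1000 * major + 100 * minor + patch`. The helper names (`DecodeCudaRuntimeVersion`, `DecodeCudnn8Version`, `MeetsGpuRequirements`) are hypothetical and not part of the plugin. Note that the cuDNN build number (`.96`) is not included in the reported value.

```cpp
// Hypothetical helpers (not part of the plugin): decode the integers reported
// by the CUDA runtime and cuDNN so they can be compared with the stated
// requirements (CUDA 11.6, cuDNN 8.5.0.96).
struct Version {
    int major;
    int minor;
    int patch;
};

// cudaRuntimeGetVersion() reports 1000 * major + 10 * minor (11.6 -> 11060).
inline Version DecodeCudaRuntimeVersion(int encoded) {
    return Version{encoded / 1000, (encoded % 1000) / 10, 0};
}

// cudnnGetVersion() for cuDNN 8.x reports 1000 * major + 100 * minor + patch
// (8.5.0 -> 8500). The build number (".96") is not part of this value.
inline Version DecodeCudnn8Version(int encoded) {
    return Version{encoded / 1000, (encoded % 1000) / 100, encoded % 100};
}

// Strict check: true only for CUDA 11.6 together with cuDNN 8.5.0.
inline bool MeetsGpuRequirements(int cudaEncoded, int cudnnEncoded) {
    const Version cuda = DecodeCudaRuntimeVersion(cudaEncoded);
    const Version cudnn = DecodeCudnn8Version(cudnnEncoded);
    return cuda.major == 11 && cuda.minor == 6 &&
           cudnn.major == 8 && cudnn.minor == 5 && cudnn.patch == 0;
}
```

In a real build you would obtain the inputs with `cudaRuntimeGetVersion(&v)` and `static_cast<int>(cudnnGetVersion())`. The check is deliberately exact because the listing names exact versions; whether other minor versions also work is not stated.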

Technical Details

Features:

- Real-time transcription from microphone input to text in 99 languages

- Real-time translation from microphone input to English text

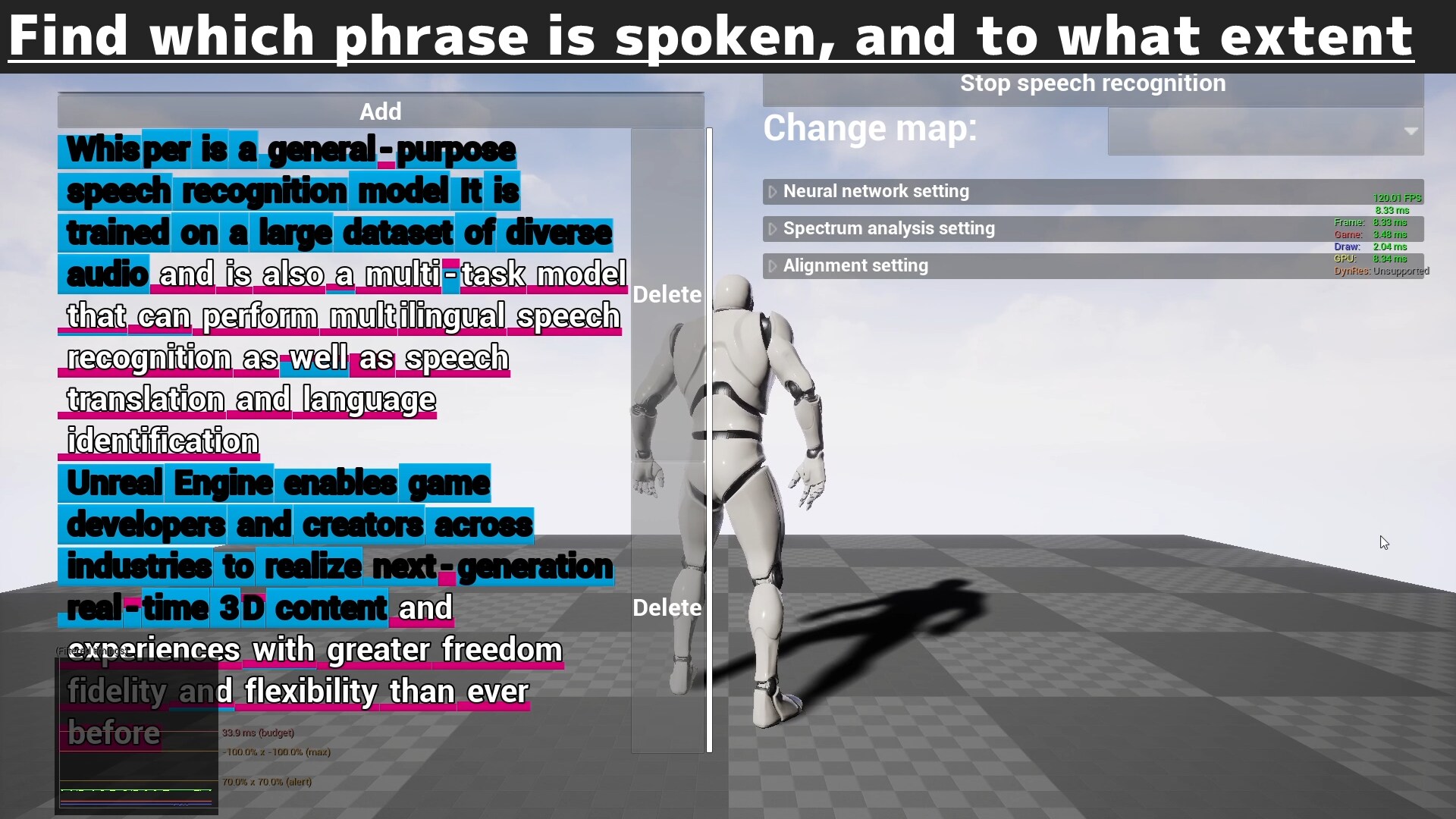

- Real-time alignment from microphone input to user-specified text
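All three features start from the same step: turning live microphone audio into the input Whisper expects, which is 16 kHz mono audio processed in windows of up to 30 seconds (480,000 samples). The sketch below shows one way to do that in C++: downmix, resample, and keep a rolling 30-second window that is zero-padded when shorter. How the plugin actually buffers audio is not documented here, so `DownmixToMono`, `ResampleLinear`, and `RollingAudioBuffer` are hypothetical names, and the linear resampler is a simplification chosen for brevity.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Whisper consumes 16 kHz mono audio in windows of up to 30 seconds.
constexpr int kWhisperSampleRate = 16000;
constexpr std::size_t kWindowSamples = 30 * kWhisperSampleRate;  // 480,000

// Average interleaved multi-channel PCM frames into a single channel.
std::vector<float> DownmixToMono(const std::vector<float>& interleaved, int channels) {
    std::vector<float> mono(interleaved.size() / channels);
    for (std::size_t frame = 0; frame < mono.size(); ++frame) {
        float sum = 0.0f;
        for (int c = 0; c < channels; ++c) sum += interleaved[frame * channels + c];
        mono[frame] = sum / channels;
    }
    return mono;
}

// Linear-interpolation resampler. It has no anti-aliasing filter, so a
// production pipeline should low-pass the signal before downsampling.
std::vector<float> ResampleLinear(const std::vector<float>& in, int inRate, int outRate) {
    if (in.empty() || inRate == outRate) return in;
    const std::size_t outLen = static_cast<std::size_t>(
        static_cast<long long>(in.size()) * outRate / inRate);
    std::vector<float> out(outLen);
    const double step = static_cast<double>(inRate) / outRate;
    for (std::size_t i = 0; i < outLen; ++i) {
        const double pos = i * step;
        const std::size_t i0 = static_cast<std::size_t>(pos);
        const std::size_t i1 = std::min(i0 + 1, in.size() - 1);
        const double frac = pos - static_cast<double>(i0);
        out[i] = static_cast<float>(in[i0] * (1.0 - frac) + in[i1] * frac);
    }
    return out;
}

// Keeps only the most recent `windowSamples` samples of 16 kHz mono audio.
class RollingAudioBuffer {
public:
    explicit RollingAudioBuffer(std::size_t windowSamples) : windowSamples_(windowSamples) {}

    void Push(const std::vector<float>& samples) {
        buffer_.insert(buffer_.end(), samples.begin(), samples.end());
        if (buffer_.size() > windowSamples_) {
            const auto excess = static_cast<std::ptrdiff_t>(buffer_.size() - windowSamples_);
            buffer_.erase(buffer_.begin(), buffer_.begin() + excess);
        }
    }

    // Current window, zero-padded at the end to the full window length.
    std::vector<float> Window() const {
        std::vector<float> w(buffer_);
        w.resize(windowSamples_, 0.0f);
        return w;
    }

    std::size_t Size() const { return buffer_.size(); }

private:
    std::size_t windowSamples_;
    std::vector<float> buffer_;
};
```

A typical loop would call `DownmixToMono` and `ResampleLinear(mono, 48000, kWhisperSampleRate)` on each captured microphone block, push the result into a `RollingAudioBuffer(kWindowSamples)`, and hand `Window()` to the model at a fixed interval.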

Code Modules:

- AudioInputSpectrumAnalysis (Runtime)

- ByteLevelBpeTokenizer (Runtime)

- CustomizedOnnxRuntime (Runtime)

- WhisperOnnxModel (Runtime)
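The `ByteLevelBpeTokenizer` module name points to byte-level byte-pair encoding, the GPT-2-style tokenization Whisper uses. As an illustration of the technique (not the module's actual code), the sketch below starts from raw UTF-8 bytes and repeatedly merges the adjacent pair with the lowest merge rank until no ranked pair remains. It omits the regex pre-splitting and byte-to-printable-character mapping that GPT-2-style tokenizers also apply, and `MergeRanks` and `BytePairEncode` are hypothetical names.

```cpp
#include <climits>
#include <cstddef>
#include <map>
#include <string>
#include <utility>
#include <vector>

// Merge table: adjacent symbol pair -> rank (lower rank is merged first).
using MergeRanks = std::map<std::pair<std::string, std::string>, int>;

// Byte-level BPE: begin with one symbol per byte, then merge the
// lowest-ranked adjacent pair (leftmost on ties) until none is left.
std::vector<std::string> BytePairEncode(const std::string& text, const MergeRanks& ranks) {
    std::vector<std::string> symbols;
    for (unsigned char byte : text) symbols.emplace_back(1, static_cast<char>(byte));

    while (symbols.size() > 1) {
        int bestRank = INT_MAX;
        std::size_t bestIndex = 0;
        for (std::size_t i = 0; i + 1 < symbols.size(); ++i) {
            auto it = ranks.find({symbols[i], symbols[i + 1]});
            if (it != ranks.end() && it->second < bestRank) {
                bestRank = it->second;
                bestIndex = i;
            }
        }
        if (bestRank == INT_MAX) break;  // no ranked pair remains
        symbols[bestIndex] += symbols[bestIndex + 1];
        symbols.erase(symbols.begin() + static_cast<std::ptrdiff_t>(bestIndex + 1));
    }
    return symbols;
}
```

Because it works on bytes rather than characters, any input, including text in all 99 languages, can be encoded without an "unknown token": a character outside the merge table simply stays split into its UTF-8 bytes.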

Number of Blueprints: 2

Number of C++ Classes: 13+

Network Replicated: No

Supported Development Platforms: Windows 64-bit

Supported Target Build Platforms: Windows 64-bit

Documentation: Link

Important/Additional Notes:

- To use the plugin with a GPU, you need to install CUDA 11.6 and cuDNN 8.5.0.96.